VL2's Dr. Lorna Quandt and Ms. Melissa Malzkuhn Win New $300,000 NSF Funding Award

VL2 team members Dr. Lorna Quandt and Ms. Melissa Malzkuhn have won a coveted $300,000 National Science Foundation (NSF) award for the project "Signing Avatars & Immersive Learning: Development and Testing of a Novel Embodied Learning Environment."

Project Abstract

Improved resources for learning American Sign Language (ASL) are in high demand. Traditional educational materials for ASL tend to include books and videos, but there has been limited progress in using cutting-edge technologies to harness the visual-spatial nature of ASL for improved learning outcomes. Interactive speaking avatars have become valuable learning tools for spoken language instruction, whereas the potential uses of signing avatars have not been adequately explored.

The aim of this EArly-concept Grant for Exploratory Research (EAGER) is to investigate the feasibility of a system in which signing avatars (computer-animated virtual humans built from motion capture recordings) teach users ASL in an immersive virtual environment. The system is called Signing Avatars & Immersive Learning (SAIL).

The project focuses on developing and testing this entirely novel ASL learning tool while fostering the inclusion of underrepresented minorities in STEM. This work has the potential to substantially advance the fields of virtual reality, ASL instruction, and embodied learning.

This project draws on the cognitive neuroscience of embodied learning to test the SAIL system. The ultimate goal is to develop a prototype of the system and test its use with a sample of hearing non-signers. The signing avatars are created from motion capture recordings of deaf native signers producing ASL.

The avatars are placed in a virtual reality landscape accessed via head-mounted goggles. Users enter the virtual reality environment, and their own movements are captured by a gesture-tracking system. A "teacher" avatar guides users through an interactive ASL lesson involving both the observation and production of signs.

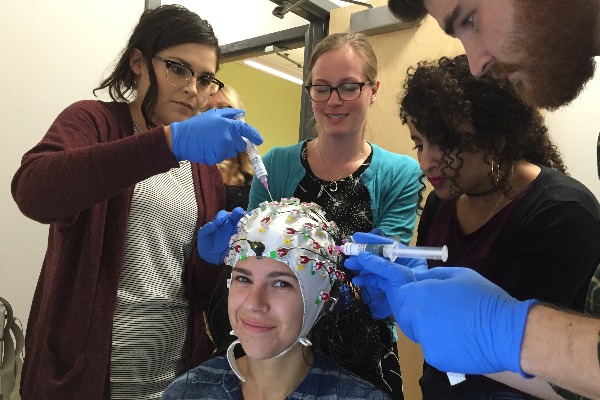

Users learn ASL signs from both a first-person and a third-person perspective. Including the first-person perspective may enhance the potential for embodied learning. After SAIL is developed, the project will conduct an electroencephalography (EEG) experiment to examine how the brain's sensorimotor systems are engaged by the embodied experiences SAIL provides.

The extent of neural activity in the sensorimotor cortex while an observer watches another person sign provides insight into how the observer is processing the signs within SAIL.

The project team is pioneering the integration of multiple technologies (avatars, motion capture systems, virtual reality, gesture tracking, and EEG) with the goal of making progress toward an improved tool for sign language learning.

This award reflects NSF's statutory mission and has been deemed worthy of support through evaluation using the Foundation's intellectual merit and broader impacts review criteria.

Dr. Lorna Quandt, Assistant Professor in the Ph.D. in Educational Neuroscience (PEN) Program, serves as the Principal Investigator, and Ms. Melissa Malzkuhn, Creative Director of the VL2 Motion Light Lab, is the Co-Principal Investigator of the research project.