VL2 featured in Gallaudet research newsletter

Updates from VL2 and its hubs appeared in a "Research at Gallaudet" newsletter released in advance of the University's Research Expo on March 24, in which the Center will participate and showcase its latest research and translation projects.

Below is the content that appeared in the newsletter, which is available in PDF form here.

Work continues on Petitto RAVE project

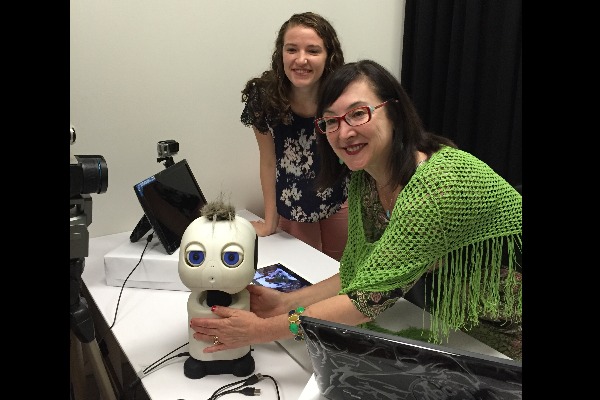

Dr. Petitto with Maki

Dr. Laura-Ann Petitto, principal investigator, and her team are making great advances in Petitto’s Brain and Language Laboratory for Neuroimaging, BL2, on a Robot-Avatar Thermal-Enhanced (RAVE) prototype project funded by Keck Foundation and National Science Foundation INSPIRE grants.

Petitto is co-principal investigator and science director for the Center on Visual Language and Visual Learning, VL2, and scientific director of BL2.

Petitto and her team are working to combine multiple technologies to identify when babies are in a cognitive-emotional state that makes them most ready to learn, triggering a virtual human to provide rhythmic-temporal nursery rhymes in sign language via avatars created using motion capture.

This project is underpinned by core experiments being conducted by Petitto, her researchers, and Ph.D. in educational neuroscience students in the BL2 lab at Gallaudet University. It has significant potential for supporting early visual language exposure for deaf babies and helping prevent language deprivation and its lasting adverse neurological impacts.

In its first year of work, the project team focused on stimuli design, stimuli creation with motion capture, and integrating recording technologies in the BL2 lab. Petitto and her BL2 team are integrating fNIRS neuroimaging, eye-tracking, and thermal infrared imaging equipment to synchronize the acquisition of data from babies. Working together, Petitto’s team and Dr. Arcangelo Merla’s team at the University of d’Annunzio, Chieti, in Italy, successfully linked the three recording technologies through a central computer and conducted several pilot runs with infants.

Drawing on scientific findings by Petitto and BL2 researchers, Melissa Malzkuhn, VL2 Digital Media and Innovation manager and creative director of the Motion Light Lab, ML2, worked with Dr. David Traum at the University of Southern California’s Institute for Creative Technologies to prepare Virtual Human software. Seeking more humanlike movement production, they configured a three-dimensional model that will be driven by motion capture from Gallaudet’s MoCap team, led by Petitto and Malzkuhn.

During the week of February 22, the team gathered in BL2’s laboratory to train with Maki, a robot from Dr. Brian Scassellati’s Robotics Lab at Yale University. Petitto and her team had worked together with Scassellati to refine the hardware layout, integrate new components, and calibrate and test the software. The February training also included a pilot with an infant to test the full system.

As the project enters its second year, Petitto and her team are working to program the Virtual Human for greater sign-like fluency of movement, gather fNIRS+Thermal IR+MoCap-rhythmic temporal frequency data, and improve the prototype’s interaction with babies.

ML2 releases fifth bilingual storybook app

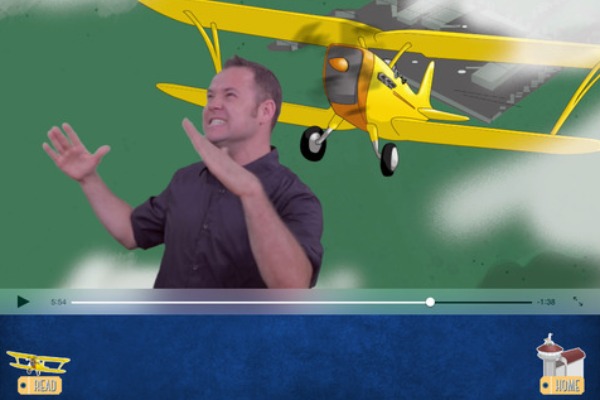

The Little Airplane That Could

ML2 in November released its fifth bilingual storybook app, The Little Airplane That Could. The app adapts a classic children's story told in American Sign Language (ASL) through richly detailed illustrations.

All five storybook apps, available on the Apple iTunes store, are based on research from studies conducted in BL2. The apps offer young visual learners unique and interactive reading experiences in which animated illustrations and English text complement videos of a storyteller using ASL. Interactive features bring children from individual English words to ASL videos of corresponding signs as well as spoken English glossaries.

The Little Airplane That Could is set apart from the four earlier apps by its increased use of personification, a formal grammatical device common to all world languages but presented in uniquely rich ways found only in a visual signed language. In ASL, personification is conveyed through person and object referencing with role shifting. The ASL storyteller uses linguistically governed eye, head, and body movements to adopt personas and to become various characters and objects, bringing them to life in exceptionally visually detailed ways.

This inclusion of personification and role-shifting in ASL catapults young readers' engagement with visual imagery, which in turn increases story encoding, memory and recall, story comprehension, and the ability to analyze relations among complex story elements and characters.

First publication from PEN program

Gallaudet University’s new Ph.D. in Educational Neuroscience (PEN) Program marked a major milestone with the first publication to come out of the program, in a peer-reviewed, open-access multidisciplinary research journal.

Adam Stone (lead author) and Geo Kartheiser, both third-year students, with Petitto and Dr. Thomas Allen, director of the VL2 resource hub Early Education Literacy Lab, EL2, published “Fingerspelling as a Novel Gateway into Reading Fluency in Deaf Bilinguals” in PLOS ONE.

The paper discusses the integral role of fingerspelling in bilingual ASL/English language acquisition and builds on research by Petitto on age of bilingual exposure as a strong predictor of bilingual language and reading mastery, as well as her discoveries about the underlying cognitive capacities that involve word decoding accuracy and word recognition automaticity.

Fingerspelling provides deaf bilinguals with important cross-linguistic links between sign language and written language, such as English, the authors write. To investigate these links, the authors used data from the VL2 Toolkit Psychometric Study from EL2 to examine how age of ASL exposure, ASL fluency, and fingerspelling skill relate to reading fluency in deaf college-age bilinguals.

They found that fingerspelling, above and beyond ASL skills, contributes to reading fluency in deaf bilinguals. Fingerspelling, manually and in print, is mutually facilitating, providing greater accuracy in word decoding and automatic word recognition. Subjects’ rapid and accurate decoding of ASL fingerspelling predicted rapid and accurate decoding of print words, suggesting a common, underlying decoding skill that develops in different modalities, the authors write.

These findings have significant implications for developing optimal approaches for reading instruction for deaf and hard of hearing children.

“This is a pioneering contribution to advancing science and translation,” Petitto said. “This paper advances findings that contribute to seeing the world of reading and to the opportunity to advance spectacular reading success in young deaf children.”

Dr. Peter Hauser, associate professor at the National Technical Institute for the Deaf at the Rochester Institute of Technology and a VL2 legacy scientist, is also a co-author.

VL2 to host knowledge fest May 9

VL2 and its four resource hubs will host a knowledge festival on Monday, May 9, 2016, at Gallaudet University.

During the event, VL2 researchers and staff, led by Petitto, will share with the community the work VL2 has accomplished since its establishment in 2006. They also will discuss the Center’s four resource hubs and the rich array of information and products these hubs offer to the nation and world, as well as ongoing and future projects with the potential to impact our understanding of how the human brain works and how babies learn language.

Exciting information will be shared about:

- Research conducted at BL2;

- Research and papers produced by teams of VL2 researchers at affiliate institutions and laboratories;

- The PEN program;

- Projects funded by grants, including Keck Foundation and National Science Foundation INSPIRE grants;

- Advances in educational research and a visual communications and sign language checklist, by EL2;

- Innovative products and projects at ML2;

- Translational work being done in the Translation for the Science of Language and Learning Lab, TL2;

- Collaborations with other universities and laboratories across the nation and around the world; and,

- Much more that VL2 and its hubs have to offer in years to come to Gallaudet and its mission, as well as to advance the well-being of deaf and hard of hearing people around the world.

More information will be shared at a future date.

- By Tara Schupner Congdon, Manager of Communications

*Note: This material is based upon work supported by the National Science Foundation under grant numbers 0541953 (VL2, first five years), 1041725 (VL2, second five years), and 1547178 (INSPIRE).